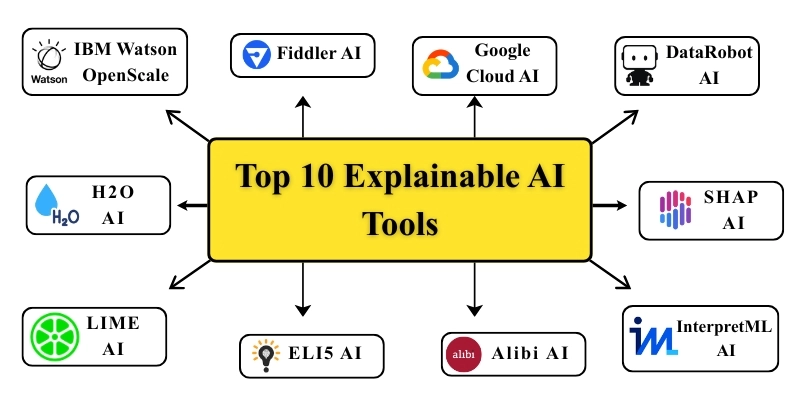

Top 10 Explainable AI Tools: 5 Free & 5 Paid for Better Transparency

Published: 21 Feb 2026

Are you tired of trying to understand complex AI decisions? With so many tools on the market, it can be hard to know which ones actually make AI decisions clear.

That’s why we’ve collected the top 10 explainable AI tools, 5 paid and 5 free, to help you improve transparency and make AI decisions easier to understand.

Why Explainable AI Matters

AI models become harder to understand as they grow more complex. Explainable AI (XAI) helps people understand the decisions AI models make, which builds confidence and transparency. Here is why it matters:

- Transparency – Shows why AI made a certain decision.

- Trust – People trust AI more when they understand how it works.

- Accountability – Makes it possible to check that AI decisions are safe and acceptable.

- Bias Detection – Helps identify and correct bias and other errors in AI models.

With XAI tools, businesses can make sure their AI is not just powerful but also easy to understand.

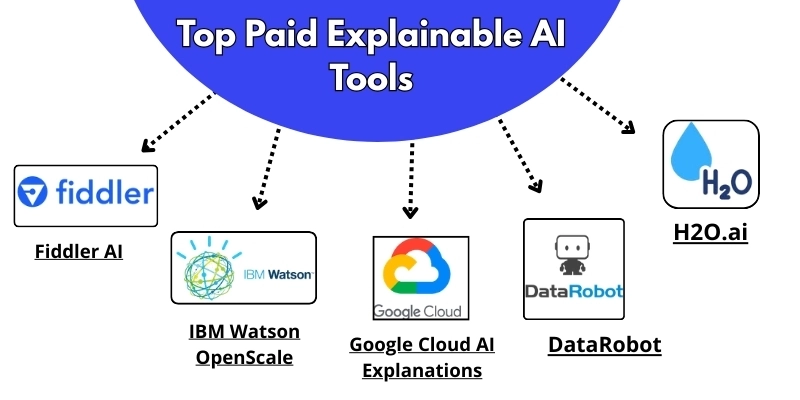

Top Paid Explainable AI Tools

Paid XAI tools typically offer more advanced features, integrate with business workflows, and can handle complex AI models. They work best for companies that need robust, flexible explainability.

1. IBM Watson OpenScale

Best for: Enterprise-level AI transparency and fairness

Price: Starts at $140/month

IBM Watson OpenScale helps businesses build AI systems that are not only effective but also transparent and accountable. It monitors AI models in real time, detects problems such as bias and drift, and provides detailed explanations for the predictions those models make.

Key Features:

- Continuous analysis of model performance

- Bias detection and mitigation

- In-depth explanations of model predictions

- Integration with IBM Cloud and several third-party platforms
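To make "bias detection" more concrete, here is a minimal sketch of one widely used fairness metric, the disparate impact ratio: the favorable-outcome rate of a monitored group divided by that of a reference group. Values well below 1.0 suggest the monitored group is disadvantaged, and a common rule of thumb (the "four-fifths rule") flags anything under 0.8. This is plain Python for illustration, not the OpenScale API; the group labels and loan decisions are hypothetical.

```python
def favorable_rate(outcomes, groups, group):
    """Share of favorable outcomes (1) among records that belong to `group`."""
    selected = [o for o, g in zip(outcomes, groups) if g == group]
    if not selected:
        raise ValueError(f"no records for group {group!r}")
    return sum(selected) / len(selected)


def disparate_impact(outcomes, groups, monitored, reference):
    """Favorable-outcome rate of the monitored group divided by the reference group's rate."""
    return favorable_rate(outcomes, groups, monitored) / favorable_rate(outcomes, groups, reference)


# Hypothetical loan decisions: 1 = approved, 0 = rejected.
outcomes = [1, 0, 0, 1, 0, 1, 1, 1, 0, 1]
groups = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]

ratio = disparate_impact(outcomes, groups, monitored="A", reference="B")
print(f"disparate impact: {ratio:.2f}")  # group A: 2/5 approved, group B: 4/5 approved
if ratio < 0.8:  # "four-fifths" rule of thumb
    print("possible bias against group A")
```

Here group A is approved half as often as group B, so the ratio falls well under the 0.8 threshold and would be flagged for review.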

Company Info:

- Revenue: US$60-70 billion (IBM overall)

- Employees: 270,000+ (IBM overall)

- CEO: Arvind Krishna (CEO of IBM)

- Founded: IBM founded in 1911; Watson OpenScale launched in 2018.

- Headquarters: Armonk, New York, USA

- Parent Company: IBM Corporation

2. Fiddler AI

Best for: Real-time explainability and monitoring for businesses

Price: Contact for pricing

Fiddler AI is an AI monitoring platform that lets companies track, explain, and improve the performance of their AI models. It is especially useful for teams that must ensure their AI-driven decisions are fair, compliant, and accountable.

Key Features:

- Real-time monitoring and tracking of model outputs

- Bias detection and fairness assessment

- Explanations of individual model predictions

- Customizable alerts and reports
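Real-time monitoring usually includes checking whether live inputs have drifted away from the training data. The sketch below computes the Population Stability Index (PSI), one common drift score, from two histograms over the same bins. It is a generic illustration, not Fiddler's own implementation; the bin counts are hypothetical and the 0.2 alert threshold is an assumed, widely quoted rule of thumb.

```python
import math


def psi(expected_counts, actual_counts, eps=1e-6):
    """Population Stability Index between two histograms that share the same bins."""
    exp_total = sum(expected_counts)
    act_total = sum(actual_counts)
    score = 0.0
    for e, a in zip(expected_counts, actual_counts):
        # Convert counts to proportions; eps avoids log(0) for empty bins.
        p = max(e / exp_total, eps)
        q = max(a / act_total, eps)
        score += (q - p) * math.log(q / p)
    return score


# Hypothetical histograms of one input feature (e.g. customer age) over 4 bins.
training = [250, 250, 250, 250]
live = [100, 200, 300, 400]

drift = psi(training, live)
print(f"PSI = {drift:.3f}")
if drift > 0.2:  # assumed alert threshold
    print("alert: feature distribution has shifted")
```

Identical distributions give a PSI of 0; the skewed live data above scores roughly 0.23, which would trigger the alert.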

Company Info:

- Revenue: US$10–20 million annually (estimated)

- Employees: 100+

- CEO: Krishna Gade

- Founded: 2018

- Headquarters: Palo Alto, California, USA

- Parent Company: Fiddler Labs, Inc.

3. Google Cloud AI Explanations

Best for: Scalable explainability for Google Cloud AI models

Price: Starts at $0.01 per prediction (on top of Google Cloud fees)

Google Cloud AI Explanations is a set of tools that helps teams already using Google Cloud for their AI projects understand how their machine learning models behave. It shows which input features influence each prediction.

Key Features:

- Easy integration with Google Cloud AI services

- Visualization of feature importance for model predictions

- Automatically generated explanations

- Works with TensorFlow and other Google AI tools

Company Info:

- Revenue: US$350 billion (Alphabet overall, 2024)

- Employees: 150,000+

- CEO: Sundar Pichai

- Founded: Google Cloud in 2008; Vertex Explainable AI grew out of AI Explanations, launched in 2019.

- Headquarters: Mountain View, California, USA

- Parent Company: Google LLC / Alphabet Inc.

4. DataRobot

Best for: AI model building with explainability and interpretability

Price: Contact for pricing

DataRobot is an enterprise AI platform that lets you not only build strong models but also make them easy to understand. It helps users see how models make predictions, which makes it a great choice for companies that care about transparency and accountability.

Key Features:

- AutoML with built-in feature explanations

- Evaluation of model transparency and interpretability

- Model performance monitoring

- Integration with well-known data platforms such as SAP, Salesforce, and more

Company Info:

- Revenue: US$100 million (estimated)

- Employees: 800+

- CEO: Debanjan Saha

- Founded: 2012

- Headquarters: Boston, Massachusetts, USA

- Parent Company: DataRobot, Inc.

5. H2O.ai

Best for: End-to-end AI platform with explainability tools

Price: Starts at $999/month

H2O.ai offers a set of open-source and enterprise AI tools with built-in explainability.

Its open-source machine learning platform, H2O-3, includes explainability features that give teams detailed insight into how models behave, helping them make smart choices about how to apply those models.

Key Features:

- Open-source and enterprise AI offerings

- Explainability tools for understanding what a model has learned

- Bias detection and fairness analysis

- Support for various data platforms
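Partial dependence plots (PDPs) are a common way to see what a model has learned about a single feature. The idea fits in a few lines: force one feature to each value on a grid, predict for every row in the dataset, and average the results. The house-price model and data below are hypothetical, and this is plain Python rather than the H2O API.

```python
def partial_dependence(predict, rows, feature, grid):
    """Average prediction when `feature` is forced to each value in `grid`."""
    curve = []
    for value in grid:
        total = 0.0
        for row in rows:
            modified = dict(row)  # copy so the original data is untouched
            modified[feature] = value
            total += predict(modified)
        curve.append(total / len(rows))
    return curve


# Hypothetical house-price model (in $1000s): price rises with size, falls with age.
def predict(row):
    return 50 + 2.0 * row["size"] - 1.5 * row["age"]


rows = [
    {"size": 80, "age": 10},
    {"size": 120, "age": 30},
    {"size": 100, "age": 20},
]

grid = [60, 90, 120]
curve = partial_dependence(predict, rows, "size", grid)
for size, avg in zip(grid, curve):
    print(f"size={size}: average predicted price {avg:.1f}")
```

Because this toy model is linear in `size`, the curve rises by a constant 60 per grid step; for a real model, bends in the curve reveal nonlinear effects.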

Company Info:

- Revenue: US$50 million annually (estimated)

- Employees: 200+

- CEO: Sri Satish Ambati (Founder & CEO)

- Founded: 2012

- Headquarters: Mountain View, California, USA

- Parent Company: H2O.ai, Inc.

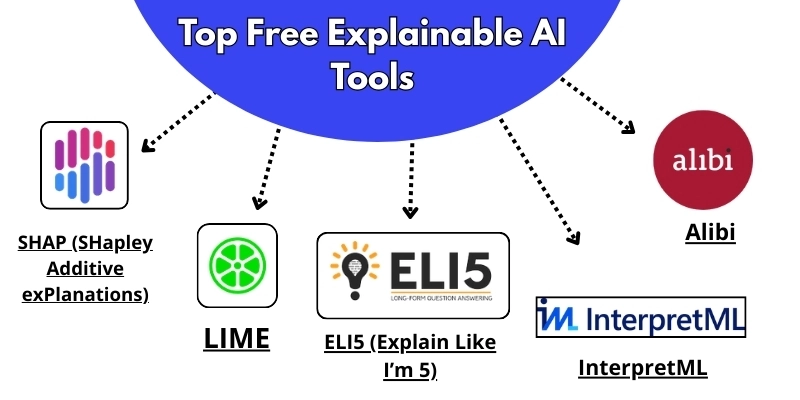

Top Free Explainable AI Tools

Free XAI tools are a good choice for individuals or businesses with limited budgets. They provide simple but useful features for understanding AI models, though they usually require some programming skills.

The best part about these explainable AI tools is that they are completely free and open source.

6. SHAP (SHapley Additive exPlanations)

Best for: Feature importance and model interpretability

Price: Free (Open Source)

SHAP is a free, open-source library that explains individual model predictions using Shapley values, a concept from cooperative game theory.

It’s one of the most popular ways to figure out which features contribute most to a model’s outputs.
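The idea behind Shapley values can be shown by brute force. The sketch below computes exact Shapley values for a tiny three-feature model by enumerating every coalition of features, replacing "absent" features with a baseline value (one common convention). The SHAP library relies on much faster approximations and model-specific algorithms, so treat this as an illustration of the underlying math, not of SHAP's API. The model, baseline, and input are hypothetical.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values: each feature's weighted average marginal contribution."""
    n = len(x)

    def value(coalition):
        # Features in the coalition keep their real value; the rest use the baseline.
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            # Standard Shapley weight |S|! (n - |S| - 1)! / n!
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi


# Hypothetical model with an interaction between features 0 and 1.
def model(z):
    return 3 * z[0] + 2 * z[1] + z[0] * z[1] - z[2]


x = [1.0, 2.0, 4.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(model, x, baseline)
print([round(p, 6) for p in phi])
# The values add up to model(x) - model(baseline) (the "efficiency" property).
print(round(sum(phi), 6), model(x) - model(baseline))
```

Note how the interaction term `z[0] * z[1]` is split evenly between features 0 and 1, while feature 2 gets a negative attribution because it lowers the output.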

Key Features:

- Shows how important each feature is to a model

- Supports many different types of machine learning models

- Explains each individual prediction in full

- Open source with strong community support

Company Info:

- Revenue: Open-source tool (no company revenue)

- Employees: Community-driven

- Founder: Scott Lundberg

- Founded: 2017

- Headquarters: Global, community-based project.

- Parent Company: Independent open-source project

7. LIME (Local Interpretable Model-agnostic Explanations)

Best for: Local model interpretability

Price: Free (Open Source)

LIME is a free tool that explains a model’s predictions in terms people can easily understand. It shows how a decision was made for a particular input by approximating the black-box model locally with a simple, interpretable model, such as a linear model.
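LIME's core idea can be sketched without the library: sample points around the input, query the black-box model, weight each sample by how close it is to the input, and fit a weighted linear model whose coefficients act as the local explanation. The black-box function, sampling spread, kernel width, and sample count below are assumptions chosen for illustration; the real LIME package adds feature selection, discretization, and other refinements.

```python
import math
import random


def black_box(x1, x2):
    # Hypothetical nonlinear model we want to explain.
    return x1 ** 2 + 3 * x2


def solve(a, b):
    """Solve the linear system a @ w = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):
        w[r] = (m[r][n] - sum(m[r][c] * w[c] for c in range(r + 1, n))) / m[r][r]
    return w


def explain_locally(x, n_samples=500, spread=0.1, kernel_width=0.25, seed=0):
    """Fit a proximity-weighted linear surrogate around x; returns [intercept, w1, w2]."""
    rng = random.Random(seed)
    xtwx = [[0.0] * 3 for _ in range(3)]  # accumulates X^T W X
    xtwy = [0.0] * 3                      # accumulates X^T W y
    for _ in range(n_samples):
        d = [rng.gauss(0, spread), rng.gauss(0, spread)]  # perturbation around x
        weight = math.exp(-(d[0] ** 2 + d[1] ** 2) / kernel_width ** 2)  # closer counts more
        features = [1.0, d[0], d[1]]  # intercept plus offsets from x
        y = black_box(x[0] + d[0], x[1] + d[1])
        for i in range(3):
            xtwy[i] += weight * features[i] * y
            for j in range(3):
                xtwx[i][j] += weight * features[i] * features[j]
    return solve(xtwx, xtwy)


intercept, w1, w2 = explain_locally([1.0, 2.0])
print(f"local effect of x1: {w1:.2f}, local effect of x2: {w2:.2f}")
```

Near the input (1, 2), the surrogate's coefficients come out close to 2 and 3, which are the local slopes of the nonlinear model at that point. A different input would yield a different explanation, which is exactly what "local" means here.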

Key Features:

- Model-agnostic: works with any machine learning model

- Focuses on local explanations for individual predictions

- Easy-to-read visualizations, even for complicated models

- Free and open source

Company Info:

- Revenue: Open-source library (no company revenue)

- Employees: Community-driven and maintained by contributors worldwide

- Founder: Marco Tulio Ribeiro, Sameer Singh, and Carlos Guestrin

- Founded: 2016

- Headquarters: Community-based project

- Parent Company: Independent open-source project

8. ELI5 (Explain Like I’m 5)

Best for: Simple explanations for machine learning models

Price: Free (Open Source)

ELI5 is a free Python library that helps explain machine learning models in an easy-to-understand way. It works with scikit-learn models and shows how different features influence a model’s predictions.
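One technique ELI5 offers for inspecting black-box models is permutation importance: shuffle a single feature's column, re-score the model, and measure how much worse it gets. Below is a from-scratch sketch of that idea, not ELI5's own code; the model, data, and mean-squared-error metric are hypothetical choices for illustration.

```python
import random


def mse(model, rows, targets):
    return sum((model(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)


def permutation_importance(model, rows, targets, n_features, n_repeats=10, seed=0):
    """Average increase in error when each feature column is randomly shuffled."""
    rng = random.Random(seed)
    base_error = mse(model, rows, targets)
    importances = []
    for j in range(n_features):
        increase = 0.0
        for _ in range(n_repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)
            # Rebuild each row with feature j replaced by the shuffled value.
            shuffled = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
            increase += mse(model, shuffled, targets) - base_error
        importances.append(increase / n_repeats)
    return importances


# Hypothetical trained model: relies heavily on feature 0, a little on 1, ignores 2.
def model(r):
    return 4 * r[0] + 0.5 * r[1]


data_rng = random.Random(42)
rows = [[data_rng.random(), data_rng.random(), data_rng.random()] for _ in range(200)]
targets = [model(r) for r in rows]  # the model fits these targets perfectly

importances = permutation_importance(model, rows, targets, 3)
for name, score in zip(["f0", "f1", "f2"], importances):
    print(f"{name}: {score:.3f}")
```

Shuffling the feature the model ignores changes nothing, so its importance is exactly zero, while the heavily weighted feature shows by far the largest error increase.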

Key Features:

- Simple, easy-to-understand explanations of machine learning models

- Works with popular Python machine learning libraries such as scikit-learn

- Shows feature importance and how individual decisions are made

- Free and open source

Company Info:

- Revenue: Open-source tool (no company revenue)

- Employees: Community-driven

- Founder: Open-source project, not a company

- Founded: 2017

- Headquarters: Community-based project

- Parent Company: Independent open-source project

9. InterpretML

Best for: Interpreting machine learning models with built-in explainability features

Price: Free (Open Source)

InterpretML is a free, open-source toolkit for explaining machine learning models. It offers both global explanations (how the model behaves overall) and local explanations (why it made a specific prediction), which makes it useful for understanding how models work.
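InterpretML's glass-box models, such as the Explainable Boosting Machine (EBM), are additive: a prediction is an intercept plus one learned function per feature, so each feature's contribution can be read off directly. The sketch below hand-codes such a model to show how local explanations (per-prediction contributions) and global explanations (mean absolute contribution across a dataset) fall out of that structure. The shape functions and applicants are hypothetical; a real EBM learns its shape functions with boosting, and this is not the InterpretML API.

```python
# Hypothetical "learned" shape functions for a credit-risk score.
INTERCEPT = -1.0
SHAPES = {
    "income": lambda v: -0.02 * (v - 50),        # higher income lowers risk
    "late_payments": lambda v: 0.6 * min(v, 5),  # effect saturates after 5
    "age": lambda v: 0.3 if v < 25 else 0.0,     # small bump for young applicants
}


def local_explanation(row):
    """Per-feature contributions for one prediction (they sum to score - intercept)."""
    return {name: f(row[name]) for name, f in SHAPES.items()}


def score(row):
    return INTERCEPT + sum(local_explanation(row).values())


def global_importance(rows):
    """Mean absolute contribution of each feature across a dataset."""
    totals = {name: 0.0 for name in SHAPES}
    for row in rows:
        for name, contribution in local_explanation(row).items():
            totals[name] += abs(contribution)
    return {name: total / len(rows) for name, total in totals.items()}


applicants = [
    {"income": 30, "late_payments": 3, "age": 22},
    {"income": 80, "late_payments": 0, "age": 45},
    {"income": 55, "late_payments": 1, "age": 31},
]

print(local_explanation(applicants[0]))   # why applicant 0 got their score
print(round(score(applicants[0]), 2))
print(global_importance(applicants))      # which features matter most overall
```

Because the model is additive, the local and global views are exact rather than approximated, which is the main appeal of glass-box models.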

Key Features:

- Model-agnostic explainability

- Global and local explanations

- Compatible with most machine learning libraries.

- Openly available and free to use.

Company Info:

- Revenue: Open-source tool (no company revenue)

- Employees: Community-driven

- Founder: Microsoft Research

- Founded: Released in 2019 by Microsoft Research.

- Headquarters: Community-based project (no physical HQ)

- Parent Company: Supported by Microsoft Research

10. Alibi

Best for: Model-agnostic explainability and fairness analysis

Price: Free (Open Source)

Alibi is a free, open-source Python library for inspecting and explaining machine learning models. It is useful in industries such as finance and healthcare, where users need to explain model decisions and check them for transparency.
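Among the methods Alibi provides are counterfactual explanations, which answer the question "what is the smallest change to this input that would flip the model's decision?" Alibi finds counterfactuals with optimization-based search; the sketch below swaps in a simple brute-force grid search over a hypothetical loan model, just to show what a counterfactual looks like. It is not Alibi's API, and the step sizes are assumptions.

```python
from itertools import product


def approve(applicant):
    # Hypothetical loan classifier: approve when the score clears a threshold.
    return 0.5 * applicant["income"] - 0.8 * applicant["debt"] > 10


def counterfactual(applicant, steps, max_steps=10):
    """Cheapest change (counted in steps) that flips the decision, or None if none is found."""
    original = approve(applicant)
    names = list(steps)
    best, best_cost = None, None
    # Try every combination of -max_steps..+max_steps steps for each feature.
    for moves in product(range(-max_steps, max_steps + 1), repeat=len(names)):
        cost = sum(abs(k) for k in moves)
        if best_cost is not None and cost >= best_cost:
            continue  # cannot beat the best candidate found so far
        candidate = dict(applicant)
        for name, k in zip(names, moves):
            candidate[name] = applicant[name] + k * steps[name]
        if approve(candidate) != original:
            best, best_cost = candidate, cost
    return best


applicant = {"income": 40, "debt": 15}  # score 0.5*40 - 0.8*15 = 8, so rejected
print(approve(applicant))
print(counterfactual(applicant, steps={"income": 2, "debt": 1}))
```

The result reads as an actionable explanation: with the same income but 3 units less debt, this applicant would have been approved.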

Key Features:

- Provides explanations for any machine learning model

- Trust scores and other tools to check how reliable a model’s predictions are

- Works after the model has been trained (post-hoc) to explain its decisions.

- Free to use and easy to integrate

Company Info:

- Revenue: Open-source tool (no company revenue)

- Employees: Community-driven

- Founder: Seldon (AI startup)

- Founded: Released in 2019 by Seldon Technologies.

- Headquarters: Community-based project

- Parent Company: Maintained by SeldonIO with community support

Tips for Choosing the Right Explainable AI Tool

When choosing the best explainable AI tool, keep these things in mind:

- Model Compatibility: Make sure the tool works with the models you’re using.

- Feature Set: Tools focus on different things, such as feature importance, local explanations, or bias detection, so pick one that meets your needs.

- Security and Privacy: Check that the tool follows privacy and security rules for sensitive data.

- Integration: Ensure the tool can work with your current setup and platforms.

- Pricing: Free tools are good for small projects, while paid tools offer more advanced features and support.

Final Thoughts

So, tech lovers, it’s time to conclude this blog post! We’ve covered the Top 10 Explainable AI Tools, from paid tools like IBM Watson OpenScale and Fiddler AI to free tools such as SHAP, LIME, and InterpretML.

For new users, I recommend trying a tool like LIME or SHAP because they are easy to use and well suited to individual researchers and smaller teams. Try one of these tools today and make your AI systems more transparent and ethical!

If you want to explore more about the latest and best AI tools, check out our Tech Tools category.

Common Questions on Explainable AI Tools

Here are some frequently asked questions about Explainable AI Tools to help you understand how they work and why they’re important for our daily lives.

Explainable AI tools for data science help data scientists understand and explain machine learning models. Some commonly used tools include:

- LIME

- SHAP

- InterpretML

These tools provide insight into how models make decisions, allowing users to trust their results and improve their models more effectively.

Explainable AI tools assist decision-making by offering transparent explanations of how algorithms reach their conclusions.

They help decision-makers understand the factors that influence outcomes, allowing them to make better-informed, data-driven decisions.

AI explainer tools are essential for ensuring ethics and fairness by allowing stakeholders to understand how AI models make decisions.

This transparency makes it easier to find and fix any errors in the model, which ensures that AI systems are trustworthy and appropriate.

Explainable AI tools used for deep learning include:

- LIME

- SHAP

- Captum

- Xpdeep

- Integrated Gradients

These tools make it easier to understand complicated neural network models by highlighting key features and showing what drives the models’ predictions.
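Integrated Gradients, one of the methods listed above, attributes a prediction to each input feature by averaging the model's gradient along a straight path from a baseline input x' to the actual input x, then multiplying by (x_i - x'_i). The sketch below approximates that path integral with a midpoint Riemann sum and uses finite-difference gradients on a small hypothetical function; libraries such as Captum compute exact gradients through the neural network instead.

```python
import math


def model(x):
    # Hypothetical smooth "network": a sigmoid over a weighted sum with an interaction.
    z = 1.5 * x[0] - 2.0 * x[1] + 0.5 * x[0] * x[2]
    return 1 / (1 + math.exp(-z))


def gradient(f, x, h=1e-5):
    """Central finite-difference gradient of f at x."""
    grad = []
    for i in range(len(x)):
        up, down = list(x), list(x)
        up[i] += h
        down[i] -= h
        grad.append((f(up) - f(down)) / (2 * h))
    return grad


def integrated_gradients(f, x, baseline, steps=200):
    """Midpoint Riemann-sum approximation of integrated gradients."""
    avg_grad = [0.0] * len(x)
    for k in range(steps):
        alpha = (k + 0.5) / steps
        point = [b + alpha * (xi - b) for xi, b in zip(x, baseline)]
        for i, g in enumerate(gradient(f, point)):
            avg_grad[i] += g / steps
    return [(xi - b) * g for xi, b, g in zip(x, baseline, avg_grad)]


x = [1.0, 0.5, 2.0]
baseline = [0.0, 0.0, 0.0]
attributions = integrated_gradients(model, x, baseline)
print([round(a, 4) for a in attributions])
# Completeness: the attributions sum to f(x) - f(baseline).
print(round(sum(attributions), 4), round(model(x) - model(baseline), 4))
```

The completeness check is a useful sanity test in practice: if the attributions do not add up to the change in output, the number of integration steps is probably too small.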

AI explainability tools support visualization by creating visual representations of how AI models make decisions.

Tools like LIME and SHAP provide importance graphs and heatmaps that help users understand the model’s behavior in an intuitive, visual way.

Explainable artificial intelligence tools for educational purposes assist instructors and students in understanding how AI models work.

These tools make it easier to understand and use AI in learning situations by letting you observe how models make choices and explain the logic behind them.

The best AI tools used for machine learning explainability include:

- LIME

- SHAP

- ELI5

These tools are widely used to understand what machine learning models have learned. They show how features affect results and help users make models work better.

Explainable AI tools for computer vision such as Grad-CAM and LIME provide visual explanations of model decisions.

They highlight which parts of an image influence a model’s prediction, which helps validate the model and build trust in it.
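Grad-CAM needs access to a convolutional network's internal gradients, so it is hard to show in a few lines. A simpler, related technique called occlusion sensitivity conveys the same idea: cover one patch of the image at a time and record how much the model's score drops. The tiny 4x4 "image" and scoring function below are hypothetical stand-ins for a real image and classifier.

```python
def occlusion_map(score, image, patch=2, fill=0.0):
    """Drop in score when each patch x patch block of the image is blanked out."""
    h, w = len(image), len(image[0])
    base = score(image)
    heatmap = [[0.0] * w for _ in range(h)]
    for top in range(0, h, patch):
        for left in range(0, w, patch):
            occluded = [row[:] for row in image]
            for r in range(top, min(top + patch, h)):
                for c in range(left, min(left + patch, w)):
                    occluded[r][c] = fill
            drop = base - score(occluded)
            # Every pixel in the patch gets the same importance value.
            for r in range(top, min(top + patch, h)):
                for c in range(left, min(left + patch, w)):
                    heatmap[r][c] = drop
    return heatmap


# Hypothetical classifier score that only "looks at" the top-left corner.
def score(img):
    return sum(img[r][c] for r in range(2) for c in range(2))


image = [
    [0.9, 0.8, 0.1, 0.2],
    [0.7, 0.9, 0.3, 0.1],
    [0.2, 0.1, 0.5, 0.4],
    [0.3, 0.2, 0.6, 0.8],
]

heatmap = occlusion_map(score, image)
for row in heatmap:
    print(" ".join(f"{v:.1f}" for v in row))
```

Only the top-left patch lights up in the resulting heatmap, correctly revealing the region this toy classifier relies on.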

An AI explainer tool for legal reasoning helps legal professionals understand how AI models analyze case data.

Tools like Lex Machina and ROSS Intelligence provide transparency by explaining how AI reaches conclusions based on legal texts and past cases.
